Have you ever wondered whether you can tell how someone is feeling just by looking at their face? Often, you can. Facial expressions provide valuable cues about a person’s emotions.

Researchers have extensively studied the connection between facial expressions and emotions, leading to incredible discoveries and applications in various fields such as psychology, marketing, and even artificial intelligence. In this article, we will dive into the fascinating world of emotions and how they can be uncovered through facial features and expressions.

The Universal Language of Facial Expressions

When it comes to emotions, there is a universal language that transcends cultural differences – facial expressions.

The renowned psychologist Paul Ekman conducted extensive research on emotions across different cultures and identified six basic emotions whose facial expressions people around the world can recognize: happiness, sadness, anger, fear, surprise, and disgust.

The Role of Facial Muscles

To understand how facial expressions reveal emotions, it is crucial to learn about the muscles responsible for these movements. The human face has more than 40 muscles, and when activated, they create various expressions.

Facial movements can be either voluntary or involuntary. Voluntary movements, such as a posed smile, are under our conscious control, while involuntary movements arise spontaneously in response to an emotion and are much harder to suppress.

When experiencing different emotions, specific facial muscles contract or relax, resulting in distinctive facial expressions.

For example, when someone is happy, the zygomaticus major muscle, which runs from the cheekbone to the corner of the mouth, pulls the corners of the lips upward and outward, producing a smile.

Microexpressions: The Window to Hidden Emotions

Microexpressions are brief facial expressions that occur spontaneously and unconsciously, lasting only a fraction of a second. They are termed “micro” because they are often too fast for the untrained eye to detect.

However, with the help of advanced technology and training, experts can uncover these microexpressions and gain insights into a person’s true emotions, even if they are trying to conceal them.

Microexpressions can betray true feelings, as they are difficult to control, unlike deliberate expressions that can be consciously manipulated.

Detecting microexpressions requires training and proficiency in recognizing the fleeting facial cues associated with specific emotions.
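
To make the idea of a "fleeting" expression concrete, here is a minimal sketch of how software might flag candidate microexpressions in video. It assumes that some other system has already assigned an emotion label to every frame; the function name `find_brief_expressions`, the `"neutral"` label, and the half-second cutoff are illustrative assumptions rather than an established standard.

```python
from itertools import groupby


def find_brief_expressions(frame_labels, fps, max_seconds=0.5, neutral="neutral"):
    """Return (label, start_frame, duration_seconds) for short non-neutral runs.

    frame_labels: one emotion label per video frame (assumed to be given).
    fps: frames per second of the video.
    max_seconds: assumed upper bound on how long a microexpression lasts.
    """
    events = []
    start = 0
    # groupby collapses consecutive identical labels into runs.
    for label, run in groupby(frame_labels):
        length = len(list(run))
        duration = length / fps
        if label != neutral and duration <= max_seconds:
            events.append((label, start, duration))
        start += length
    return events


# Made-up example: a 3-frame flash of fear, then a long, deliberate smile.
labels = ["neutral"] * 10 + ["fear"] * 3 + ["neutral"] * 5 + ["happiness"] * 40
for label, start_frame, seconds in find_brief_expressions(labels, fps=30):
    print(f"{label} at frame {start_frame}, lasting {seconds:.2f} s")
```

In this made-up sequence only the brief flash of fear is reported; the smile lasts longer than the cutoff, so it is treated as an ordinary expression.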

Machine Learning and Emotion Recognition

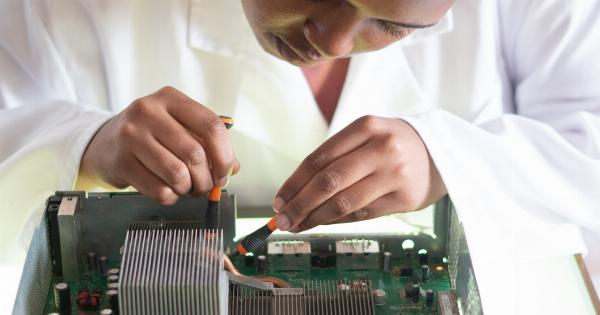

Advancements in technology, particularly in the field of machine learning, have brought about exciting possibilities for emotion recognition from facial characteristics.

Researchers have developed computer algorithms that can analyze facial features with impressive accuracy, enabling machines to recognize emotions and respond accordingly.

These algorithms work by breaking down facial images into smaller components, such as the position of the eyebrows, the openness of the eyes, or the curvature of the mouth.

By comparing these components against a database of labeled example expressions, the algorithm can estimate which emotion is most likely being conveyed.
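
The comparison step can be sketched in a few lines of Python. This is a simplified illustration, not how any particular product works: the three features, their values, and the tiny reference "database" are all invented for the example, and the matching rule used here is a plain nearest-neighbor search.

```python
import math

# Hypothetical reference database. Each entry pairs an invented feature vector
# (eyebrow_raise, eye_openness, mouth_curvature), scaled to roughly -1..1,
# with an emotion label. The numbers are illustrative, not measured data.
REFERENCE_EXPRESSIONS = [
    ((0.0, 0.1, 0.8), "happiness"),
    ((-0.2, -0.3, -0.7), "sadness"),
    ((-0.8, 0.2, -0.4), "anger"),
    ((0.9, 0.9, 0.0), "surprise"),
]


def classify_expression(features, references=REFERENCE_EXPRESSIONS):
    """Return the label of the reference vector closest to `features`."""
    best_label, best_distance = None, math.inf
    for ref_features, label in references:
        # Euclidean distance between the observed and the reference features.
        distance = math.dist(features, ref_features)
        if distance < best_distance:
            best_label, best_distance = label, distance
    return best_label


# A face with slightly raised brows and a strongly upturned mouth.
print(classify_expression((0.1, 0.2, 0.7)))  # prints "happiness"
```

Real systems typically extract many more features and learn the mapping from thousands of labeled images, but the underlying idea of matching measured components to known examples is the same.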

The Real-Life Applications

Uncovering emotions based on facial characteristics has numerous real-life applications. Let’s explore a few of them.

1. Psychology and Mental Health

In the field of psychology, understanding and recognizing emotions play a crucial role in therapy and mental health assessment.

By analyzing a person’s facial expressions, therapists and psychologists can gain valuable insights into their emotional state, aiding in the diagnosis and treatment of various conditions, such as depression, anxiety, and post-traumatic stress disorder (PTSD).

2. Marketing and Advertising

Marketers and advertisers carefully study consumer emotions to create effective campaigns.

Facial analysis can help businesses gauge customers’ responses to their products or advertisements, enabling them to tailor their marketing strategies accordingly. By understanding the emotions triggered by specific elements, marketers can improve consumer engagement and increase sales.

3. Human-Computer Interaction

Emotion recognition has immense potential in improving human-computer interaction. By understanding a user’s emotional state through facial analysis, computers and virtual assistants can adapt their responses to better meet users’ needs.

For example, a computer vision system can detect frustration on a user’s face and respond by providing additional assistance or simplifying the user interface.
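
A rough sketch of that kind of adaptive behavior is shown below. It assumes an emotion recognition system already produces a frustration score between 0 and 1 for each video frame; the class name `FrustrationMonitor`, the window size, the threshold, and the action names are all hypothetical choices made for illustration.

```python
from collections import deque


class FrustrationMonitor:
    """Suggest help only when frustration stays high over several frames."""

    def __init__(self, window=5, threshold=0.6):
        # Keep only the most recent `window` scores.
        self.scores = deque(maxlen=window)
        self.threshold = threshold

    def update(self, frustration_score):
        """Record a new score (0..1) and return the suggested action."""
        self.scores.append(frustration_score)
        average = sum(self.scores) / len(self.scores)
        window_full = len(self.scores) == self.scores.maxlen
        if window_full and average >= self.threshold:
            return "offer_help"
        return "no_action"


monitor = FrustrationMonitor(window=3)
for score in [0.9, 0.8, 0.7, 0.1]:
    print(monitor.update(score))
```

Averaging over a short window, rather than reacting to a single frame, keeps the interface from interrupting the user because of one momentary grimace.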

4. Lie Detection

Microexpressions have gained popularity in law enforcement and security sectors as a tool for lie detection.

By closely monitoring facial expressions during interviews or interrogations, investigators look for brief signs of concealed emotion that may point to deception and warrant further questioning.

Challenges and Future Implications

Although the field of emotion recognition from facial characteristics has made significant progress, there are challenges to be addressed.

Cultural differences in how emotions are displayed, variation between individuals, and personal idiosyncrasies can all affect the accuracy of these methods.

Despite these challenges, the future implications of emotion recognition are promising. As technology advances, algorithms will become more sophisticated, enabling even finer-grained emotion understanding.

This could lead to exciting developments in fields such as healthcare, education, entertainment, and more.

Conclusion

The ability to uncover emotions based on facial characteristics has wide-ranging implications in various fields.

Whether it is understanding consumer behavior, improving mental health diagnosis, or enhancing human-computer interactions, this research opens up exciting possibilities for a more emotionally intelligent future. As technology continues to evolve, we are likely to witness even more sophisticated methods for detecting and understanding human emotions.